On January 4, 2023, CircleCI, a continuous integration and continuous delivery (CI/CD) service, reported a data breach. The company urged its customers to take immediate action while a full investigation is ongoing. The first critical actions CircleCI recommended were to ‘rotate any and all secrets stored in CircleCI’ and ‘review related activities for any unauthorized access starting from December 21, 2022 to January 4, 2023’.

Why is it such a big deal?

Malicious use of access keys in conjunction with privileged access can have a significant impact on an organization’s source code, deployment targets, and sensitive data across its infrastructure.

Operating a CI/CD pipeline requires exactly that: highly privileged access, in most cases administrative, plus direct access to the source code repositories it builds. For this reason, CI/CD pipelines are considered a critical component of the software development life cycle (SDLC).

Start investigating your cloud environment for malicious activity

Data breaches are unfortunately common and should no longer come as a surprise. Any third-party service or application can be turned into a supply chain attack vector. When that happens, excessive access that was previously benign becomes a critical exposure, allowing the threat actor to exploit the system freely.

Here are the immediate next steps security and DevOps teams should take to eliminate any possible supply chain risk, covering CircleCI’s recommendations and going beyond them:

- Discover possible entry points – The critical first step is to map, link, and review the access granted to every secret given to the compromised third-party service, so you fully understand all initial access attempts and possible lateral movement across all supply chain vectors.

Specific to the CircleCI data breach, Rezonate observed that multiple accounts had several AWS programmatic access keys with administrative privileges stored in their CircleCI configuration, allowing the creation and modification of any resource within the account.
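To triage which of the discovered keys matter most, one option is to check whether the IAM policies attached to each key’s user grant full administrative access. The sketch below assumes you have already collected the policy documents as JSON (for example with `aws iam get-policy-version`) and mapped them to the access key IDs found in CircleCI; the helper names and the sample inventory are hypothetical. It only detects the classic `"Action": "*"` on `"Resource": "*"` pattern (the shape of the AWS-managed AdministratorAccess policy) and ignores conditions and permission boundaries.

```python
import json
from typing import Any, Iterable


def _as_list(value: Any) -> list:
    """IAM lets Statement, Action, and Resource be a single value or a list."""
    return value if isinstance(value, list) else [value]


def grants_full_admin(policy_document: dict) -> bool:
    """Return True if any Allow statement permits every action on every resource.

    Only the classic '"Action": "*", "Resource": "*"' pattern is checked;
    conditions, NotAction, and permission boundaries are ignored.
    """
    for stmt in _as_list(policy_document.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if "*" in actions and "*" in resources:
            return True
    return False


def admin_keys(key_to_policies: dict[str, Iterable[dict]]) -> list[str]:
    """Return the access key IDs whose owning user has a full-admin policy."""
    return sorted(
        key_id
        for key_id, policies in key_to_policies.items()
        if any(grants_full_admin(p) for p in policies)
    )


# Hypothetical inventory: access key IDs found in CircleCI project settings,
# mapped to the policy documents attached to each key's IAM user.
ADMIN_POLICY = json.loads(
    '{"Version": "2012-10-17",'
    ' "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}]}'
)
S3_READ_ONLY = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "*"}],
}
inventory = {
    "AKIAEXAMPLEADMIN0001": [ADMIN_POLICY],
    "AKIAEXAMPLEDEPLOY002": [S3_READ_ONLY],
}
print(admin_keys(inventory))  # ['AKIAEXAMPLEADMIN0001']
```

Keys flagged this way are the ones whose compromise would allow creating or modifying any resource, so they are natural candidates to review first.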

- Threat containment (& traps) – Once you have identified any and all keys, the first option is to deactivate or delete them and create new ones (avoid rotating an unused key). However, while this prevents any future use of these keys, it also limits the traces of benign or malicious activity you can collect. Why? In AWS, CloudTrail has limited authentication logging for invalid or disabled keys.

A second, preferred option is to remove all privileges from the affected users while keeping both the users and their keys active. This allows continued monitoring through ‘canary keys’: every access attempt triggers an alert and yields threat intelligence artifacts (IOCs such as source IP addresses).
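Because a de-privileged key is still valid, calls made with it keep appearing in CloudTrail (typically with an `errorCode` such as `AccessDenied`), so IOCs can be pulled straight from the records. The sketch below assumes CloudTrail log files have been downloaded locally (each file holds a top-level `Records` array, optionally gzip-compressed). `userIdentity.accessKeyId`, `sourceIPAddress`, `userAgent`, `eventTime`, `eventName`, and `errorCode` are standard CloudTrail record fields; the function names, key IDs, and sample records are hypothetical.

```python
import gzip
import json
from collections import defaultdict
from typing import Iterable


def load_records(paths: Iterable[str]) -> list[dict]:
    """Read CloudTrail log files (plain or .gz JSON with a top-level 'Records' array)."""
    records: list[dict] = []
    for path in paths:
        opener = gzip.open if path.endswith(".gz") else open
        with opener(path, "rt", encoding="utf-8") as fh:
            records.extend(json.load(fh).get("Records", []))
    return records


def canary_hits(records: Iterable[dict], canary_key_ids: set[str]) -> dict[str, dict]:
    """Collect IOCs from every record made with one of the canary access keys."""
    hits: dict[str, dict] = defaultdict(
        lambda: {"ips": set(), "user_agents": set(), "events": []}
    )
    for rec in records:
        key_id = rec.get("userIdentity", {}).get("accessKeyId")
        if key_id not in canary_key_ids:
            continue
        entry = hits[key_id]
        entry["ips"].add(rec.get("sourceIPAddress"))
        entry["user_agents"].add(rec.get("userAgent"))
        # errorCode is only present on failed calls, e.g. "AccessDenied"
        entry["events"].append(
            (rec.get("eventTime"), rec.get("eventName"), rec.get("errorCode"))
        )
    return dict(hits)


# Hypothetical sample records; in practice use load_records([...]).
sample = [
    {"eventTime": "2023-01-05T10:00:00Z", "eventName": "ListUsers",
     "sourceIPAddress": "203.0.113.7", "userAgent": "aws-cli/2.9.0",
     "errorCode": "AccessDenied",
     "userIdentity": {"accessKeyId": "AKIAEXAMPLECANARY001"}},
    {"eventTime": "2023-01-05T10:01:00Z", "eventName": "GetObject",
     "sourceIPAddress": "198.51.100.4", "userAgent": "Boto3",
     "userIdentity": {"accessKeyId": "AKIAEXAMPLEOTHER0002"}},
]
hits = canary_hits(sample, {"AKIAEXAMPLECANARY001"})
for key_id, iocs in hits.items():
    print(key_id, sorted(iocs["ips"]), iocs["events"])
```

In a live setup the same matching logic would typically sit behind an alerting pipeline rather than a batch script; the batch form just keeps the sketch self-contained.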

- Activity review & behavioral profiling – Once you have captured all suspected keys, you can begin analyzing their activity within the reported time range. In our case, we used AWS CloudTrail as the main data source and investigated access behavior patterns. The goal is to establish a ‘clean’ baseline of the activity that occurred before the breach. To help define a profile, understand the scope, and identify any potential areas of concern, consider asking the following questions:

- Reduce the overwhelming number of insignificant incident alerts and the time spent addressing them

- Increase operational visibility into cloud identity and access security across platforms

- Discover and monitor third-party cross-cloud access

- Limit permissions and restrict access to the minimum set of users required, without any impact on operations.

Once we have a good understanding of normal operation, we can apply the same approach to inspect activity from the date of the breach until the present. Here, the context of workflows, resources, and overall architecture is paramount, so it is critical to collaborate with the dev/infra team to quickly assess, validate, and prioritize findings.
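A baseline comparison like the one described above can be approximated with a set difference: record which API calls, regions, and source IPs each key used before the breach window, then surface later records that fall outside that profile. This is a minimal sketch that assumes CloudTrail records are already loaded as dicts and uses December 21, 2022 (the start of the window in CircleCI’s advisory) as the split point; the feature choice and function names are hypothetical, and real baselines usually need more history and tolerance than an exact match.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Iterable

BREACH_START = datetime(2022, 12, 21, tzinfo=timezone.utc)


def _event_time(rec: dict) -> datetime:
    # CloudTrail timestamps look like "2022-12-22T08:15:30Z"
    return datetime.strptime(rec["eventTime"], "%Y-%m-%dT%H:%M:%SZ").replace(
        tzinfo=timezone.utc
    )


def _features(rec: dict) -> set[tuple[str, str]]:
    """Behavioral features compared against the baseline."""
    return {
        ("api", f'{rec.get("eventSource")}:{rec.get("eventName")}'),
        ("region", rec.get("awsRegion", "")),
        ("ip", rec.get("sourceIPAddress", "")),
    }


def build_baseline(records: Iterable[dict]) -> dict[str, set]:
    """Per access key, the set of features seen before the breach window."""
    baseline: dict[str, set] = defaultdict(set)
    for rec in records:
        key_id = rec.get("userIdentity", {}).get("accessKeyId")
        if key_id and _event_time(rec) < BREACH_START:
            baseline[key_id] |= _features(rec)
    return baseline


def deviations(records: Iterable[dict], baseline: dict[str, set]) -> list[tuple]:
    """Records from the breach window onward with features unseen in the baseline."""
    findings = []
    for rec in records:
        key_id = rec.get("userIdentity", {}).get("accessKeyId")
        if not key_id or _event_time(rec) < BREACH_START:
            continue
        new = _features(rec) - baseline.get(key_id, set())
        if new:
            findings.append((rec["eventTime"], key_id, sorted(new)))
    return findings


# Hypothetical records for a single CI deployment key.
ident = {"accessKeyId": "AKIAEXAMPLECIKEY0001"}
records = [
    {"eventTime": "2022-12-10T09:00:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "PutObject", "awsRegion": "us-east-1",
     "sourceIPAddress": "192.0.2.10", "userIdentity": ident},
    {"eventTime": "2022-12-28T03:00:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "PutObject", "awsRegion": "us-east-1",
     "sourceIPAddress": "192.0.2.10", "userIdentity": ident},
    {"eventTime": "2022-12-29T03:00:00Z", "eventSource": "iam.amazonaws.com",
     "eventName": "CreateUser", "awsRegion": "us-east-1",
     "sourceIPAddress": "203.0.113.50", "userIdentity": ident},
]
base = build_baseline(records)
for finding in deviations(records, base):
    print(finding)
```

Only the third record is reported, because both its API call and its source IP are new for that key; the routine upload on December 28 matches the baseline and stays quiet. Each finding is still a prompt for review with the dev/infra team, not a verdict.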

- Activity review & threat models – Based on the results of the previous steps, further questions can reveal potentially malicious exploitation, such as attempts to escalate privileges, gain persistence, or exfiltrate data. To help pinpoint the most relevant findings, consider the following options:

| Activity | What it helps detect |
| --- | --- |
| Activities performed outside your regular regions | Anomalous access that may help locate compromised resources |
| Identity-creation activities (ATT&CK TA0003) | Actions such as CreateUser and CreateAccessKey that attempt to gain persistence |
| Resource-creation activities | Resource-exhaustion attempts, such as cryptomining |
| Activities performed outside the regular CircleCI IP ranges | Access attempts from external IPs that may match known-bad threat intelligence |
| Errors | “Pushing the limits” attempts to exploit user privileges that result in errors (e.g., AccessDenied) |
| Spike in enumeration activities (ATT&CK T1580) | Increased reconnaissance and mapping actions (e.g., listing users and roles) |
| Defense evasion techniques (ATT&CK TA0005) | Attempts to tamper with defense controls (e.g., DeleteTrail or modifying GuardDuty settings) |
| Secret access attempts | Brute-force attempts against mapped secrets to expand the attacker’s foothold in the account |
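Most rows of this table translate into simple rules over CloudTrail records. The sketch below tags a single record with the categories it matches and, because a “spike” needs a count rather than a single event, adds a per-key threshold for enumeration calls. The event names are real AWS API names but the lists are illustrative and far from exhaustive; `EXPECTED_REGIONS`, `CI_NETWORKS`, the category labels, and the threshold are hypothetical placeholders you would replace with your own values.

```python
import ipaddress
from collections import Counter
from typing import Iterable

# Hypothetical placeholders: replace with your own regions and CI egress ranges.
EXPECTED_REGIONS = {"us-east-1", "eu-west-1"}
CI_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]

# Illustrative, non-exhaustive event names for several rows of the table.
RULES = {
    "persistence": {"CreateUser", "CreateAccessKey", "CreateLoginProfile"},
    "resource-creation": {"RunInstances", "RequestSpotInstances"},
    "enumeration": {"ListUsers", "ListRoles", "ListAccessKeys",
                    "GetAccountAuthorizationDetails"},
    "defense-evasion": {"StopLogging", "DeleteTrail", "UpdateTrail",
                        "DeleteDetector", "UpdateDetector"},
    "secret-access": {"GetSecretValue", "GetParameter", "GetParameters"},
}


def _outside_ci_networks(source: str) -> bool:
    try:
        addr = ipaddress.ip_address(source)
    except ValueError:
        # AWS services appear as hostnames, e.g. "ec2.amazonaws.com"
        return False
    return not any(addr in net for net in CI_NETWORKS)


def categorize(rec: dict) -> set[str]:
    """Return the threat-model categories that a single CloudTrail record matches."""
    name = rec.get("eventName", "")
    tags = {category for category, names in RULES.items() if name in names}
    if rec.get("awsRegion") not in EXPECTED_REGIONS:
        tags.add("unusual-region")
    if _outside_ci_networks(rec.get("sourceIPAddress", "")):
        tags.add("non-ci-ip")
    if rec.get("errorCode"):
        tags.add("error")
    return tags


def enumeration_spikes(records: Iterable[dict], threshold: int = 20) -> dict[str, int]:
    """Access keys whose enumeration calls in the given records exceed the threshold."""
    counts = Counter(
        rec.get("userIdentity", {}).get("accessKeyId")
        for rec in records
        if rec.get("eventName") in RULES["enumeration"]
    )
    return {key: n for key, n in counts.items() if key and n > threshold}


print(sorted(categorize({
    "eventName": "DeleteTrail", "awsRegion": "ap-southeast-2",
    "sourceIPAddress": "203.0.113.9", "errorCode": "AccessDenied",
})))
# ['defense-evasion', 'error', 'non-ci-ip', 'unusual-region']
```

Passing one time window of records at a time to `enumeration_spikes` (for example, one hour) turns the threshold into a rough rate check.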

Consider all of these signals within the overall context: some may be legitimate, while others may be malicious. Correlating them with one another reduces noise and false positives.
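One simple way to correlate is to group individual findings by access key and source IP and rank each pair by how many distinct categories it hits, so a key that only produced a few errors ranks below one that also enumerated IAM and created users from an unfamiliar IP. This is a minimal sketch of an assumed heuristic, not Rezonate’s method; the finding tuples, category labels, and key IDs are hypothetical.

```python
from collections import defaultdict
from typing import Iterable


def rank_by_correlation(
    findings: Iterable[tuple[str, str, str]],
) -> list[tuple[str, str, int, list[str]]]:
    """Rank (access_key_id, source_ip) pairs by the number of distinct categories.

    Each finding is (access_key_id, source_ip, category). A pair that hits
    several independent categories is less likely to be benign noise.
    """
    grouped: dict[tuple[str, str], set[str]] = defaultdict(set)
    for key_id, ip, category in findings:
        grouped[(key_id, ip)].add(category)
    ranked = [
        (key_id, ip, len(cats), sorted(cats))
        for (key_id, ip), cats in grouped.items()
    ]
    # Most correlated first; ties broken by key and IP for stable output.
    ranked.sort(key=lambda row: (-row[2], row[0], row[1]))
    return ranked


findings = [
    ("AKIAEXAMPLEKEY000001", "203.0.113.7", "enumeration"),
    ("AKIAEXAMPLEKEY000001", "203.0.113.7", "persistence"),
    ("AKIAEXAMPLEKEY000001", "203.0.113.7", "non-ci-ip"),
    ("AKIAEXAMPLEKEY000002", "192.0.2.20", "error"),
    ("AKIAEXAMPLEKEY000002", "192.0.2.20", "error"),
]
for row in rank_by_correlation(findings):
    print(row)
```

Counting distinct categories rather than raw events keeps a noisy but harmless key (many repeated errors) from outranking a quieter key that shows several independent warning signs.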

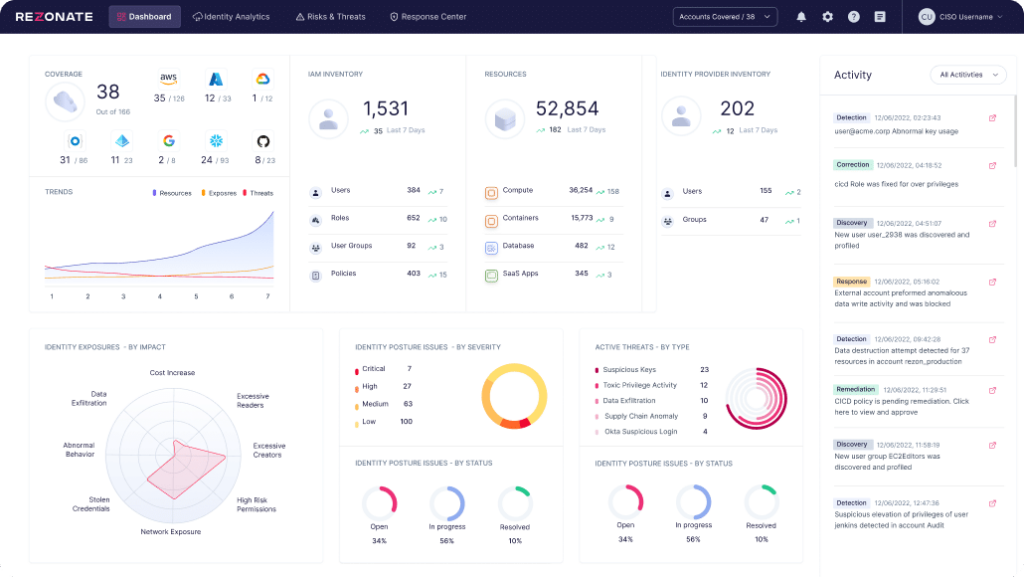

How Rezonate can help

While this guidance specifically addresses key actions related to the CircleCI data breach, it can also serve as a best practice for handling the risks of any breach.

Rezonate automates the actions described above to streamline the compromise assessment process and reduce the time and effort required for manual analysis. Rezonate simplifies discovery, detection, and investigation of the compromise.

Work with a system that can automatically correlate and summarize all activities of all identities to save critical time. Working directly with CloudTrail can be challenging: it lacks aggregation, data correlation, and tagging of privileged identities, which eventually slows you down.

We have been working with our clients and partners, using the Rezonate platform to thoroughly investigate the security incident and assess its potential impact across all the activities mentioned here. If you need assistance, please do not hesitate to contact us. Supporting our clients and the community is a core reason Rezonate was founded.